ggml.ai

About ggml.ai

ggml.ai is the home of ggml, a tensor library for machine learning that aims to enable large models and high performance on commodity hardware. Aimed at developers and researchers, ggml is written in C and has no third-party dependencies. This makes it easier to deploy large models for on-device inference without giving up flexibility.

ggml is free to use under the MIT license. Users can support its contributors through sponsorship, which helps fund further development. Optional upgrades add enhanced project collaboration, exclusive extensions, and priority access to new features.

The ggml.ai website is designed for simplicity. Navigation is clear, the essential resources are easy to find, and there are few distractions, so developers can get to the library and its machine learning capabilities quickly.

How ggml.ai works

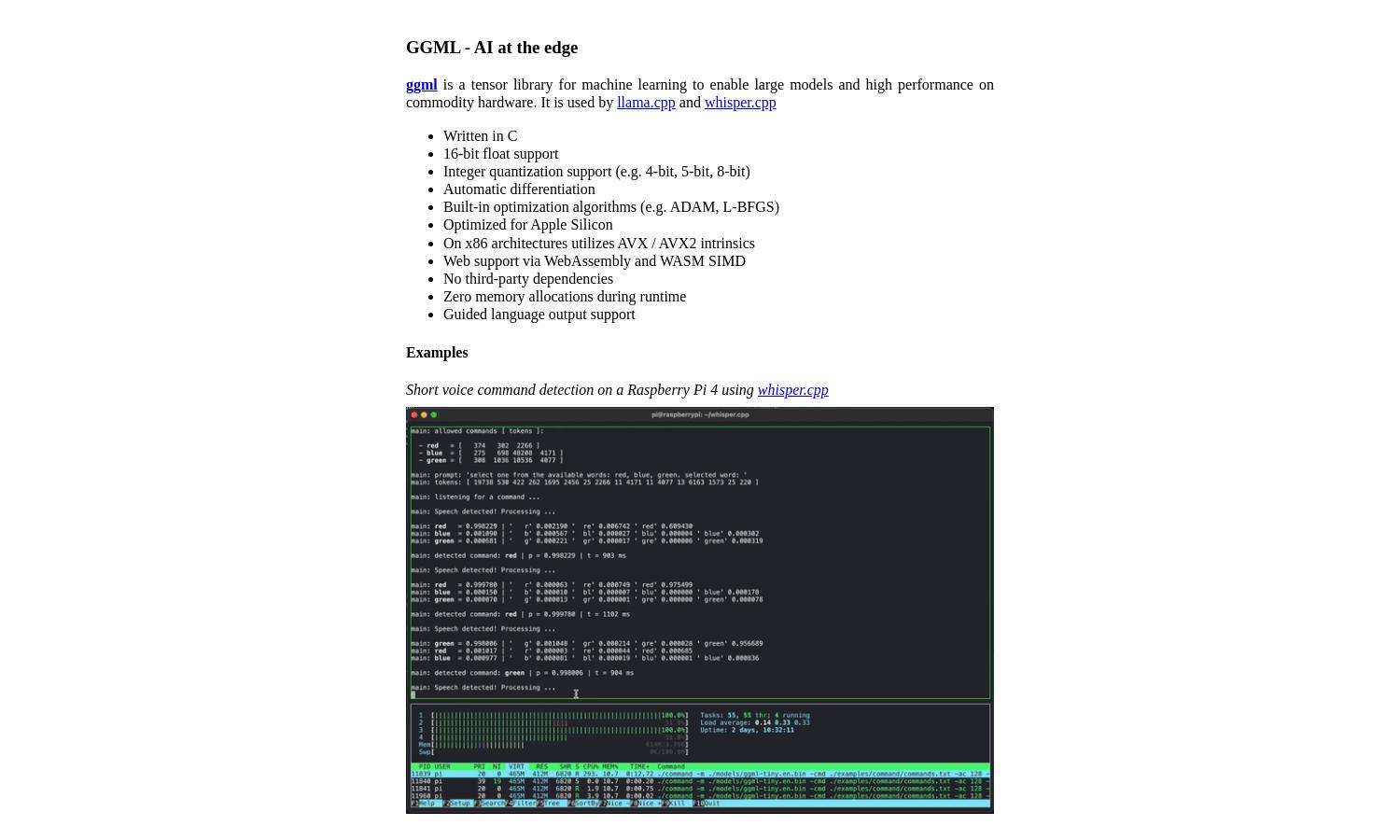

To get started, developers obtain the library and its documentation (the source code is hosted on GitHub), build it, and integrate it into their own projects, where they can use features such as integer quantization and automatic differentiation. The project's open-source community also welcomes contributions and new ideas for machine learning applications.

Key Features of ggml.ai

High-performance inference

High-performance inference is a core goal of ggml. The library provides tensor operations optimized for a range of hardware, such as Apple Silicon and x86 CPUs with AVX/AVX2 instructions, so models run quickly and make good use of the available cores. This makes it well suited to applications that need low-latency, real-time processing.
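A common way for CPU tensor libraries to spread one operation across cores is to give each worker a contiguous slice of output rows, identified by its index `ith` out of `nth` workers. The pure-Python sketch below illustrates that partitioning for a matrix-vector product. It is an illustration of the general technique, not ggml's code (ggml's kernels are written in C with SIMD intrinsics), and the function names `matvec_rows` and `matvec` are hypothetical. The workers run one after another here so the example stays simple and deterministic.

```python
def matvec_rows(W, x, y, ith, nth):
    """Hypothetical helper: compute y[r] = dot(W[r], x) for the rows owned by worker ith of nth."""
    rows = len(W)
    per_worker = (rows + nth - 1) // nth  # ceiling division so every row is covered
    r0 = ith * per_worker
    r1 = min(r0 + per_worker, rows)       # last worker may get fewer (or zero) rows
    for r in range(r0, r1):
        y[r] = sum(w * v for w, v in zip(W[r], x))


def matvec(W, x, nth=2):
    """Hypothetical driver: y = W x, split into nth row-shares."""
    y = [0.0] * len(W)
    # Sequential stand-in for dispatching each share to its own worker thread.
    for ith in range(nth):
        matvec_rows(W, x, y, ith, nth)
    return y


W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(matvec(W, [1.0, 1.0]))  # [3.0, 7.0, 11.0]
```

Because each worker writes a disjoint range of `y`, no locking is needed when the shares really do run on separate threads.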

Integer quantization support

Integer quantization lets developers store model weights in low-bit integer formats. This shrinks models substantially and reduces memory traffic, typically at the cost of a small loss in accuracy. As a result, models can run on resource-limited devices, from desktops to mobile phones and embedded systems.
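The core idea of block-wise integer quantization fits in a few lines: split a tensor into fixed-size blocks, store one floating-point scale per block, and round each value to an 8-bit integer relative to that scale. This is a simplified pure-Python sketch in the spirit of an 8-bit block format. The block size of 32, the scale rule `max|x| / 127`, and the names `quantize_q8` and `dequantize_q8` are assumptions for illustration, not ggml's exact storage layout.

```python
BLOCK = 32  # values per block (assumed for this sketch)


def quantize_q8(xs):
    """Hypothetical: quantize floats to int8 per block; returns a list of (scale, [ints])."""
    blocks = []
    for i in range(0, len(xs), BLOCK):
        chunk = xs[i:i + BLOCK]
        amax = max(abs(v) for v in chunk)
        d = amax / 127.0               # scale so the largest magnitude maps to +/-127
        inv = 1.0 / d if d else 0.0    # an all-zero block quantizes to zeros
        qs = [max(-127, min(127, round(v * inv))) for v in chunk]
        blocks.append((d, qs))
    return blocks


def dequantize_q8(blocks):
    """Reconstruct approximate floats as scale * integer."""
    return [d * q for d, qs in blocks for q in qs]


xs = [0.5, -1.0, 0.25, 1.0] * 20  # 80 values -> blocks of 32, 32 and 16
blocks = quantize_q8(xs)
ys = dequantize_q8(blocks)
print(len(blocks), max(abs(a - b) for a, b in zip(xs, ys)))
```

Rounding to the nearest integer keeps each reconstruction error within half a quantization step (`d / 2`). If the scale were stored as a 16-bit float, a block of 32 values would take 32 + 2 = 34 bytes instead of 128 bytes as 32-bit floats, roughly a 3.8x reduction.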

Automatic differentiation

Automatic differentiation computes exact gradients of a computation (up to floating-point rounding) instead of approximating them numerically. This makes it straightforward to implement training and optimization algorithms on top of ggml, helping researchers and developers experiment and iterate faster.
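Reverse-mode automatic differentiation can be sketched with a tiny scalar computation graph: each node records its parents together with the local derivative with respect to each, and a backward pass applies the chain rule from the output back to the inputs. The `Var` class below is a hypothetical illustration of the technique, not ggml's API (ggml differentiates whole tensors in C). It differentiates the toy function f(x) = a*x^2 + b.

```python
class Var:
    """Hypothetical scalar node in a computation graph."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        self.parents = parents  # tuple of (parent_node, local_derivative)

    def __add__(self, other):
        # d(u + v)/du = 1, d(u + v)/dv = 1
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        # d(u * v)/du = v, d(u * v)/dv = u
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    def backward(self):
        # Topologically order the graph so each node is processed after all its consumers.
        order, seen = [], set()

        def visit(node):
            if id(node) in seen:
                return
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)

        visit(self)
        self.grad = 1.0  # df/df = 1
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += local * node.grad  # chain rule, accumulated over all uses


x, a, b = Var(2.0), Var(3.0), Var(4.0)
f = a * (x * x) + b
f.backward()
print(f.value, x.grad, a.grad, b.grad)  # 16.0 12.0 4.0 1.0
```

The result matches the analytic derivative df/dx = 2*a*x = 12. Gradients are accumulated with `+=` because a node (here `x`) can feed into the graph more than once; fresh `Var` objects are needed before calling `backward()` on a new graph.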
