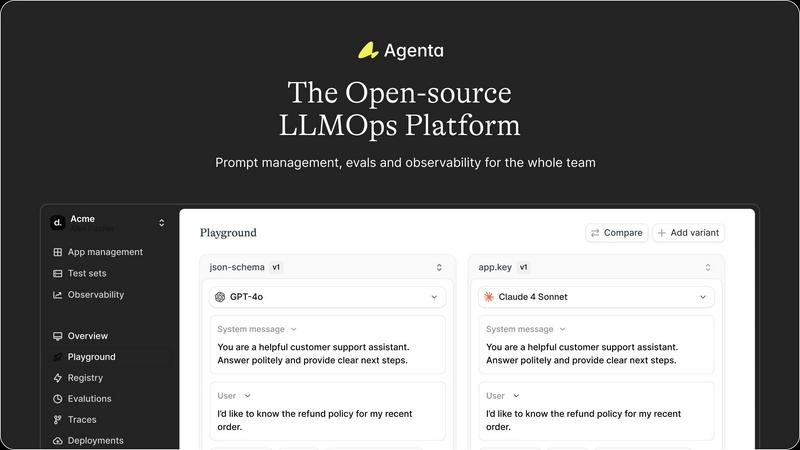

Agenta

Agenta is an open-source LLMOps platform that streamlines AI app development with prompt management, evaluations, and...

About Agenta

Agenta is an open-source LLMOps platform that helps AI teams build and deploy reliable large language model (LLM) applications. Built for developers and subject matter experts, it supports the collaborative work of experimenting with prompts, running evaluations, and debugging production issues. LLMs are inherently unpredictable, and teams often end up with scattered workflows and siloed roles as a result. Agenta centralizes LLM development in one place so that prompt management, evaluation, and observability follow consistent practices. This structured environment for quick iteration and feedback helps teams improve performance, reduce guesswork in debugging, and ship reliable AI applications faster.

Features of Agenta

Centralized Workflow Management

Agenta centralizes the entire LLM development workflow in one platform, allowing teams to keep their prompts, evaluations, and traces organized. This prevents the chaos of scattered tools and fosters collaboration among team members.

Unified Playground

The unified playground feature enables teams to experiment with prompts and models side-by-side. Users can compare various approaches, track version history, and utilize real production data for debugging, making it easier to identify the best-performing prompts.

Automated Evaluations

Agenta's evaluation system replaces guesswork with automated evaluations. Teams can use LLM-as-a-judge, rely on built-in evaluators, or run their own custom evaluation code to systematically validate every change made during development.
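As a rough illustration of what "custom evaluation code" can look like, the sketch below scores an application's outputs against expected answers. It is plain Python and does not use Agenta's SDK; the names `EvalCase`, `exact_match`, `run_evaluation`, and `fake_app` are hypothetical, and `fake_app` stands in for a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    """One test case: the input sent to the app and the answer we expect."""
    inputs: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Return 1.0 when the normalized output equals the expected answer, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_evaluation(
    app: Callable[[str], str],
    cases: List[EvalCase],
    evaluator: Callable[[str, str], float],
) -> float:
    """Run the app on every case and return the mean evaluator score."""
    if not cases:
        raise ValueError("at least one test case is required")
    scores = [evaluator(app(case.inputs), case.expected) for case in cases]
    return sum(scores) / len(scores)


def fake_app(question: str) -> str:
    # Hypothetical stand-in for an LLM-backed application.
    answers = {"Capital of France?": "Paris", "2 + 2?": "5"}
    return answers.get(question, "")


cases = [EvalCase("Capital of France?", "paris"), EvalCase("2 + 2?", "4")]
score = run_evaluation(fake_app, cases, exact_match)
print(f"mean score: {score}")
```

Keeping the evaluator as a plain function of `(output, expected)` means the same loop could run a stricter or looser scoring rule, or an LLM-as-a-judge call, without changing the rest of the code.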

Observability and Monitoring

Agenta's observability tools let teams trace every request and pinpoint where it failed. Any trace can be turned into a test with a single click, so failures seen in production can be added to a test set and checked against future changes.
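Conceptually, turning a trace into a test means copying the recorded inputs into a test case and letting a reviewer supply the correct answer. The sketch below shows that idea in plain Python; it is not Agenta's data model or API, and the `Trace` fields and the `trace_to_test_case` helper are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Trace:
    """Hypothetical record of one production request."""
    trace_id: str
    inputs: Dict[str, str]
    output: str
    error: Optional[str] = None


def trace_to_test_case(trace: Trace, expected: Optional[str] = None) -> dict:
    """Build a test case from a trace.

    The inputs are copied so later edits to the test case do not alter the
    trace. `expected` is left as None until a reviewer provides the answer
    the application should have given.
    """
    return {
        "source_trace": trace.trace_id,
        "inputs": dict(trace.inputs),
        "observed": trace.output,
        "expected": expected,
    }


failed = Trace(
    trace_id="t-001",
    inputs={"question": "What is the refund window?"},
    output="I don't know.",
)
case = trace_to_test_case(failed, expected="30 days")
print(case)
```

Storing the trace ID alongside the test case keeps a link back to the original request, which is useful when a later evaluation run fails on the same input.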

Use Cases of Agenta

Collaborative Prompt Development

Agenta facilitates collaborative prompt development by allowing product managers, developers, and domain experts to work together seamlessly. This reduces the silos commonly found in AI teams and enhances the quality of LLM applications through diverse input.

Real-Time Debugging

When issues arise in production, Agenta's observability features let teams trace the affected requests, identify where the error originated, and implement a fix quickly, which helps limit downtime.

Systematic Evaluation Processes

Using Agenta, teams can establish a systematic evaluation process that tracks results and validates changes. This is particularly useful for organizations that require a rigorous approach to testing and implementing LLMs in various applications.

Enhanced User Feedback Integration

Agenta supports user feedback integration by allowing domain experts to annotate traces and collaborate on evaluations. This feedback loop enhances the overall quality of LLM applications, ensuring they meet user needs effectively.

Frequently Asked Questions

What is LLMOps and how does Agenta fit into it?

LLMOps refers to the operational practices related to managing and deploying large language models. Agenta fits into this by providing a centralized platform that streamlines the entire workflow, from prompt management to production monitoring.

How does Agenta improve collaboration within AI teams?

Agenta improves collaboration by centralizing tools and processes, enabling product managers, developers, and experts to work together in one environment. This reduces silos and fosters communication, leading to better LLM applications.

Can Agenta be integrated with existing AI frameworks?

Yes. Agenta is designed to integrate with popular AI frameworks and model providers, including LangChain and OpenAI. Teams can keep their existing technology stack and avoid being locked into a single vendor.

What types of evaluations can be conducted with Agenta?

Agenta supports a variety of evaluation types, including automated evaluations using LLM-as-a-judge, built-in evaluators, and custom evaluation code. This ensures that teams can validate their changes effectively and systematically.
